Some thoughts on what I have learnt working at a new company that builds software. A lot of what you "should" do turns out to be the wrong thing to do. Here are some reflections on building a firm in San Francisco.

Prospects First

Speaking to prospect firms will get you further, faster, than speaking to venture capital firms. Firms that have pain points will pay for solutions, and they won't care much how many other firms have the same pain point. Venture capital firms are interested in the size of the market, the size of the outcome, the probability of success, and the experience of the team. Answering a VC's questions won't necessarily help you build a product and a business. If you can't afford to build the software that will solve the pain point you are targeting, then work out what you can build and how you can bridge the gap using other means.

Perform The Process By Hand, Before Writing Code

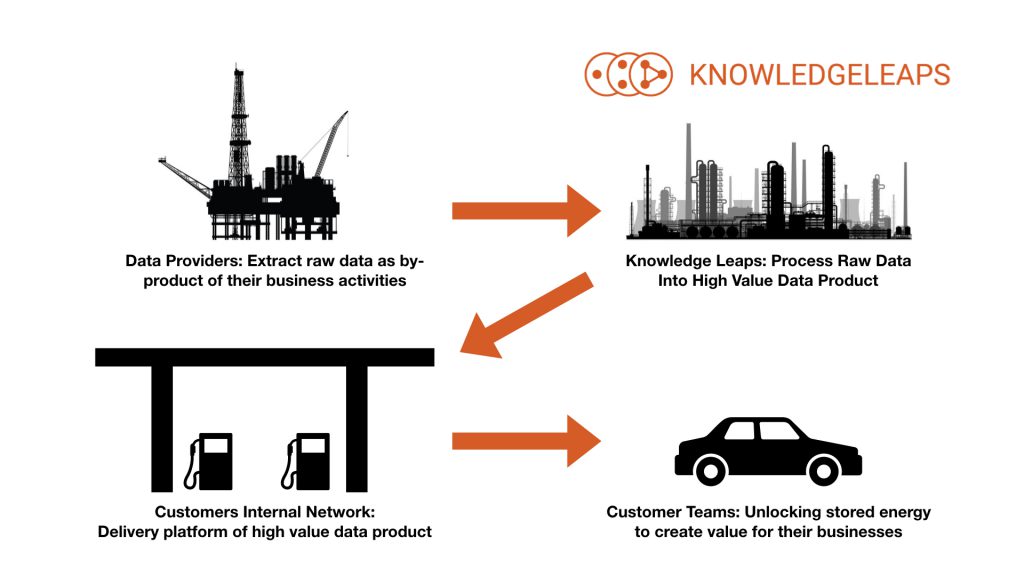

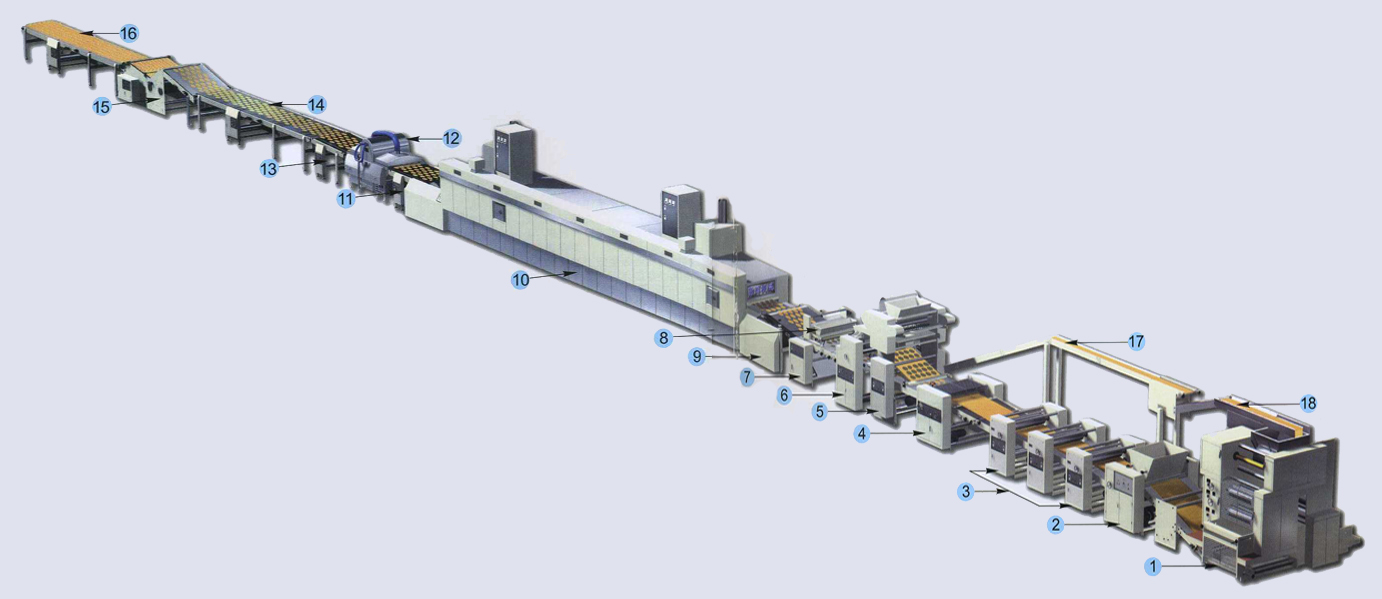

The best business software is first cut by hand, like the first machine screw. If your software replaces a human business process and you can't afford to build the software, ask yourself: "How much can my firm afford to build?"

Most processes have the same elements: Task Specification, Task Execution, Present Results. Task Execution is the most complex part, as it requires a lot of code and a lot of investment. As your company speaks to firms, work out whether humans could perform the complex Task Execution element. If you think they could, build a software architecture and framework that allows humans to do the hard work at first. This will help you refine the use case and, later, build more effective and efficient code. This also wouldn't be the first time this has been done; see here and here for more background.
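
To make the three elements concrete, here is a minimal Python sketch of what such a framework might look like. Everything in it is an assumption made for illustration: the names `TaskSpec`, `TaskResult`, `HumanExecutor`, `CodeExecutor`, `present` and `run` are hypothetical, and a real system would need queues, storage and review steps. The point is only that Task Execution sits behind one interface, so a person can do the work on day one and code can take over later without touching the other two elements.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


@dataclass
class TaskSpec:
    """Task Specification: what the customer wants done."""
    task_id: str
    instructions: str


@dataclass
class TaskResult:
    """Output of Task Execution, plus who produced it."""
    task_id: str
    output: str
    executed_by: str


class Executor(Protocol):
    """Anything that can turn a spec into a result."""
    def execute(self, spec: TaskSpec) -> TaskResult: ...


class HumanExecutor:
    """Hands the task to a person.

    `ask` is a hypothetical callback that shows the instructions to an
    operator and returns whatever they type back.
    """
    def __init__(self, ask: Callable[[str], str]) -> None:
        self.ask = ask

    def execute(self, spec: TaskSpec) -> TaskResult:
        return TaskResult(spec.task_id, self.ask(spec.instructions), "human")


class CodeExecutor:
    """Drop-in replacement once part of the work has been automated."""
    def __init__(self, fn: Callable[[str], str]) -> None:
        self.fn = fn

    def execute(self, spec: TaskSpec) -> TaskResult:
        return TaskResult(spec.task_id, self.fn(spec.instructions), "code")


def present(result: TaskResult) -> str:
    """Present Results: the same formatting whoever did the work."""
    return f"[{result.task_id}] {result.output} (via {result.executed_by})"


def run(spec: TaskSpec, executor: Executor) -> str:
    """Specification in, presented result out; the executor is swappable."""
    return present(executor.execute(spec))
```

For a by-hand first version you could pass Python's built-in `input` as the `ask` callback, so that an operator types each answer at a terminal. Swapping in a `CodeExecutor` later changes nothing for the customer, which is what lets you learn the use case before investing in the expensive part.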

A useful piece of military wisdom is worth keeping in mind: no plan survives first contact with the enemy. While customers are certainly not the enemy, the sentiment still holds. It's not until you put your plan into action and have firms use your product that you realize its true strengths and weaknesses. Here begins the process of iterating on product development.

"Speak to people, we might learn something"

This is what my business development lead says a lot. He also asks questions that get customers and prospects talking. In these moments you will learn about the firm, the buyer, the competition, and lots of other information that will make your product and service better.

"We are just starting out"

This is another useful mantra. In lots of ways we do not know where our journey will take us. It is shaped partly by company vision and partly by customer feedback. In his book The Origin of Wealth, Eric Beinhocker likens innovation to searching a technology solution space, an innovation map, for high points (which correlate with company profits and growth). The important part of this search process is customer feedback. What your company does determines your starting point on the innovation map. How your firm reacts to customer and market feedback determines which direction you go in, and ultimately will be a critical factor in its success.