I first heard the phrase "do the dogs eat the dog food" on a start-up podcast. The idea is simple: if your firm is building a product for customers, does your firm also use it?

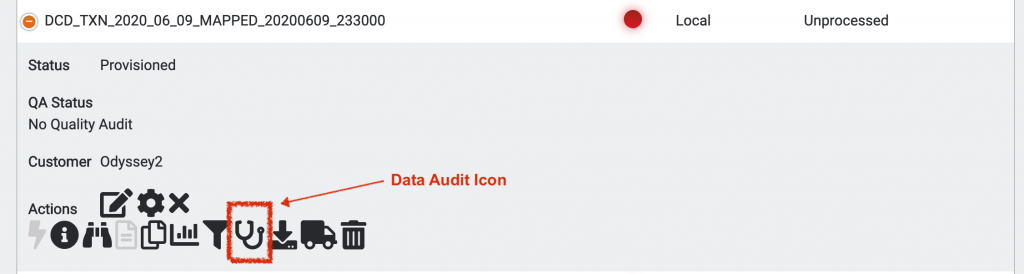

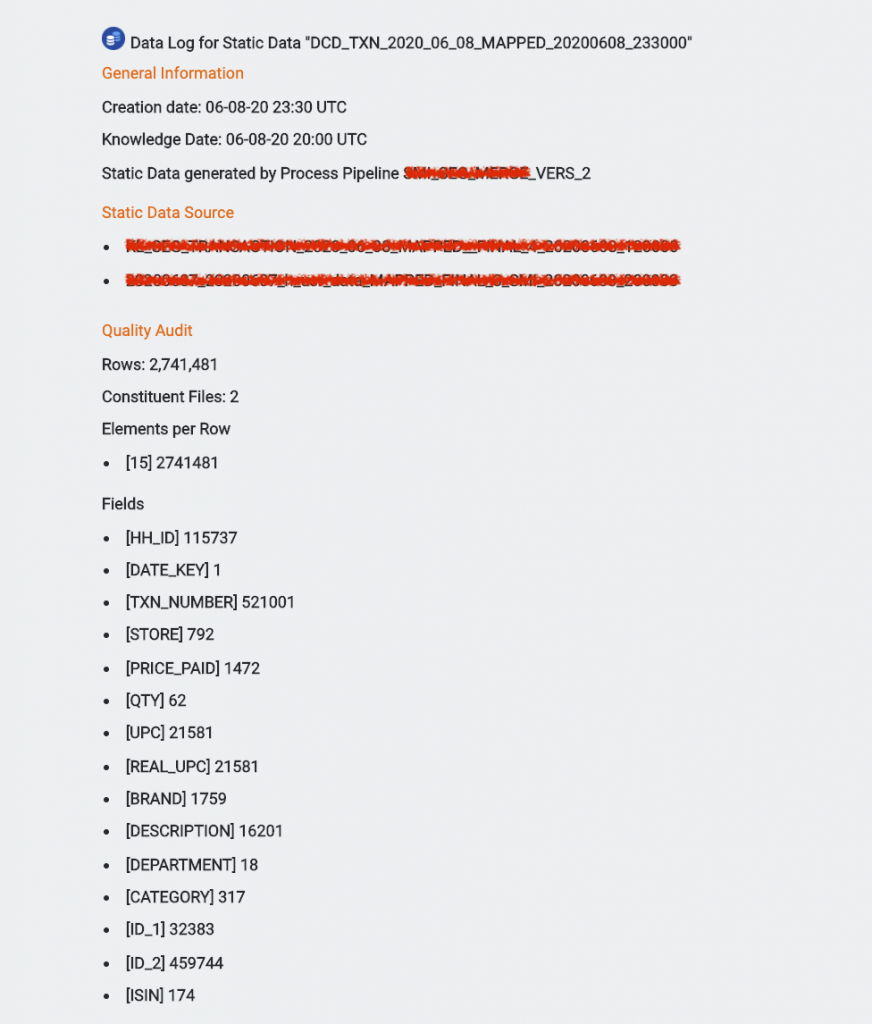

I then read this adaptation of the phrase and thought it applied to us. We ship features and code that help our customers and that help us do our jobs better. We make "dog food" and we eat it. So, if the UI for a new feature is clunky or an implementation doesn't quite hit the mark, we know about it because our team will tell us.